The Needy Robot

- yz7720

- Nov 17, 2021

The original idea we (Tuan, Sarah and I) had for this interactive project was an installation in which a participant's photo is taken as they approach a camera, and the image becomes increasingly pixelated or blurry as they move further away.

When we did the first playtest for this project, we told the participants to imagine they had just entered an art gallery or exhibition space. To encourage them to approach the installation and have their photo taken, we taped a large ‘X’ on the floor to show where to stand and to direct them to the laptop, which was sitting on a desk at the front of the room. Because the computer and the sensor were exposed to the audience, we noticed that most participants tended to wave their hands in front of the sensor, as if trying to make a connection with the computer, waving to say hi to the computational world.

That's when we decided to create a robot, to bring the computer to life and let it interact with us physically. The shape of the robot was inspired by the following picture from a Japanese animation. We adapted that clean design and developed it into a new shape that could house our Arduino set, the sensors and a whole laptop.

In the final version of the project, when you approach the robot, you see his "heart" on display. A photocell is hidden inside his left hand, with a heart "target" drawn on his palm. When you "high five" the robot, or touch the heart on the target, the photocell sends a signal to p5.js and a photo of you is taken. If you then step back, you will notice that the photo becomes pixelated, and the pixelation grows stronger the further away you move. If you move out of the ultrasonic sensor's range, you will see the robot's broken heart displayed.
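The interaction described above can be read as a small state machine. The following is only a minimal sketch of that idea, not our actual code: the mode names, the thresholds, the assumption that a covered photocell reads low, the assumption that a distance of 0 means "no echo", and the return to the heart once someone is back in range are all hypothetical.

```javascript
// Hypothetical thresholds; real values depend on the sensors and the room lighting.
const PHOTOCELL_COVERED_BELOW = 200; // assumed analog reading (0-1023) when a hand covers the photocell
const MAX_RANGE_CM = 300;            // assumed limit of the ultrasonic sensor's useful range

// Modes (names are illustrative):
//   "heart"       idle, the robot shows his heart
//   "photo"       a snapshot has been taken and is shown, pixelated by distance
//   "brokenHeart" the participant has left the sensor range
function nextMode(mode, photocell, distanceCm) {
  const covered = photocell < PHOTOCELL_COVERED_BELOW;
  // Assumption: a reading of 0 means the sensor got no echo back.
  const inRange = distanceCm > 0 && distanceCm <= MAX_RANGE_CM;

  if (mode === "heart" && covered) return "photo";          // high five: take the photo
  if (mode === "photo" && !inRange) return "brokenHeart";   // participant walked away
  if (mode === "brokenHeart" && inRange) return "heart";    // someone is back: reset (assumed)
  return mode;                                              // otherwise stay in the current mode
}
```

Keeping the transitions in one pure function like this makes it possible to check the behavior without the robot plugged in.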

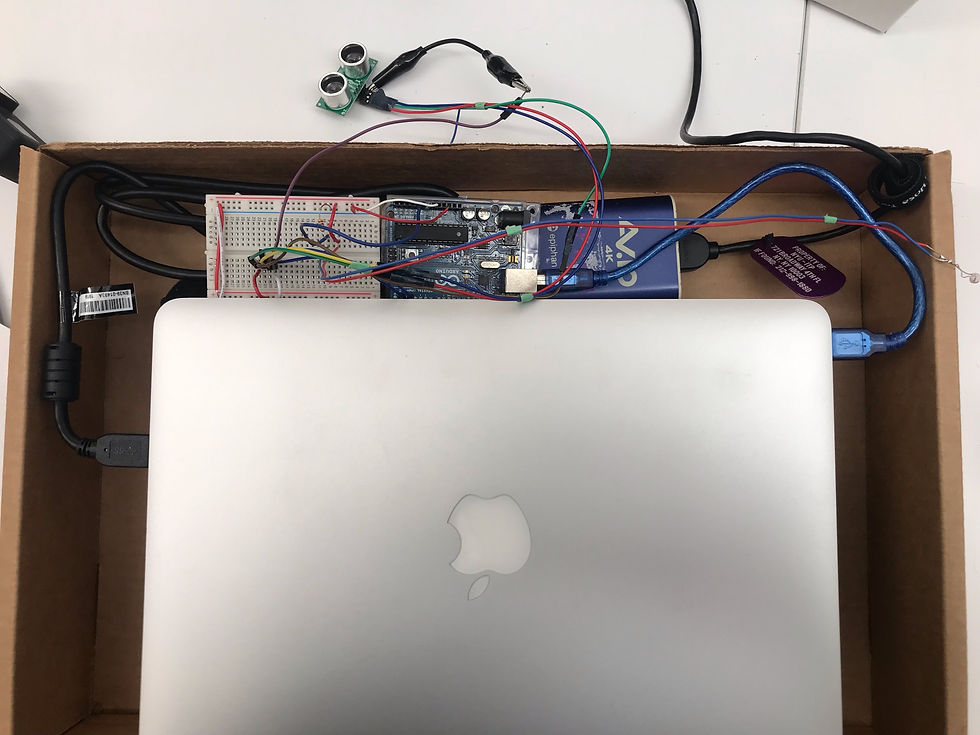

From the physical computing perspective, only two sensors (a photocell and an ultrasonic sensor) are involved, and the Arduino code is fairly simple compared to the p5.js code.
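To give a sense of how the two readings might reach p5.js, here is a rough sketch of the receiving side. It assumes the Arduino prints one comma-separated line per reading, such as `512,87` (photocell value, then distance in centimeters). That wire format and the function name `parseSensorLine` are illustrative assumptions, not taken from our sketch.

```javascript
// Assumed wire format: "photocell,distanceCm" followed by a newline, e.g. "512,87".
// Returns { photocell, distanceCm } or null if the line is malformed.
function parseSensorLine(line) {
  const parts = line.trim().split(",").map((p) => p.trim());

  // Expect exactly two non-empty fields.
  if (parts.length !== 2 || parts.some((p) => p === "")) return null;

  const photocell = Number(parts[0]);
  const distanceCm = Number(parts[1]);

  // Reject anything that is not a plain number (partial lines are common on serial).
  if (!Number.isFinite(photocell) || !Number.isFinite(distanceCm)) return null;

  return { photocell, distanceCm };
}
```

Returning `null` for malformed lines lets the drawing loop simply skip a bad reading and keep the previous values.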

Tuan revised several versions of the p5.js code before the final one worked. The sketch starts with the red heart drawn as emoji text in p5.js. When someone covers the photocell, the camera feed is shown and a snapshot is taken; the pixelated image is then drawn based on the value read from the ultrasonic sensor.
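One possible way to turn the ultrasonic reading into pixelation is to map the distance to a block size and then sample one color per block. This is a sketch under assumptions rather than Tuan's code: the constants `NEAR_CM`, `FAR_CM`, `MIN_BLOCK` and `MAX_BLOCK` and both function names are hypothetical.

```javascript
// Hypothetical tuning values.
const NEAR_CM = 30;   // at or closer than this, the photo is sharp
const FAR_CM = 200;   // at or beyond this, the photo is fully pixelated
const MIN_BLOCK = 1;  // block size in pixels when sharp
const MAX_BLOCK = 40; // block size in pixels when fully pixelated

// Linearly map a distance to a block size, clamped to [MIN_BLOCK, MAX_BLOCK].
function blockSizeForDistance(distanceCm) {
  const clamped = Math.min(Math.max(distanceCm, NEAR_CM), FAR_CM);
  const t = (clamped - NEAR_CM) / (FAR_CM - NEAR_CM);
  return Math.round(MIN_BLOCK + t * (MAX_BLOCK - MIN_BLOCK));
}

// Split a width x height image into blocks and sample the color near each block's center.
// getColor(x, y) is supplied by the caller and returns the color at that pixel.
function pixelate(getColor, width, height, block) {
  const blocks = [];
  for (let y = 0; y < height; y += block) {
    for (let x = 0; x < width; x += block) {
      const cx = Math.min(x + Math.floor(block / 2), width - 1);
      const cy = Math.min(y + Math.floor(block / 2), height - 1);
      blocks.push({ x, y, size: block, color: getColor(cx, cy) });
    }
  }
  return blocks;
}
```

In a p5.js sketch, `getColor` could wrap the snapshot's `get(x, y)` method, and each returned block could be drawn with `fill(color)` followed by `rect(x, y, size, size)`.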

To avoid cutting a hole for the computer's camera, we connected a GoPro as an external camera and selected it as the camera source in the p5.js code.
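For readers who want to try something similar, one way to pick a specific camera in the browser is to list the media devices and pass the chosen device ID to p5.js's `createCapture()` as a constraints object. The sketch below is an illustration, not necessarily what we ran: the helper names are hypothetical, and it assumes the GoPro shows up with "GoPro" somewhere in its device label.

```javascript
// Find the first video input whose label contains the keyword (case-insensitive).
// Returns its deviceId, or null if nothing matches.
function findCameraId(devices, keyword) {
  const needle = keyword.toLowerCase();
  const match = devices.find(
    (d) => d.kind === "videoinput" && d.label.toLowerCase().includes(needle)
  );
  return match ? match.deviceId : null;
}

// Build the constraints object that requests exactly that device.
function constraintsFor(deviceId) {
  return { video: { deviceId: { exact: deviceId } }, audio: false };
}

// Browser-only usage inside a p5.js sketch (shown as a comment, not run here):
//
// async function openGoPro() {
//   const devices = await navigator.mediaDevices.enumerateDevices();
//   const id = findCameraId(devices, "gopro"); // assumed label keyword
//   return id ? createCapture(constraintsFor(id)) : createCapture(VIDEO);
// }
```

Note that browsers usually leave device labels empty until the page has been granted camera permission, so the lookup may need to run after a first permission prompt.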

The fabrication of the robot also took a lot more time than we expected. A lot of hole cutting was involved in order to hide the connecting wires. We also built a platform to hold the GoPro at a height where it looks like part of the robot's eye.
